Ever since Blog and Wap created the first two cave-computers (Blog in a high-tech development pueblo out in the wilds of Boca Raton, Wap in the small California cave where he stored his wheel), there have been benchmarks.

In this article, I'm going to introduce you to some standard benchmarks currently making the rounds in the IT industry, and then I'm going to try to relate them to the world of midrange computers and specifically to the iSeries, RPG, and native DB2/400. I'll explain a little bit about what benchmarks do and what the pitfalls are that surround any attempt to compare two different computers, no matter how simple the test.

I'll address some of the problems of "sponsored tests" and the paid pundits who run them, and I'll try to help you determine exactly how big a grain of salt you'll need when reviewing the results. I'll also take a short sidetrack into the almost mystical world of "case studies" and "white papers." I'll try to keep that part short; those who know me know it's easy for me to go off into a rant about these folks, and really, there's little we can do except to laugh at them.

More productively, I'll get into some specifics about the iSeries and talk about how "proper" techniques can be completely different, depending on exactly what you are testing. No single methodology can handle everything, but you need to know what the assumptions are in any test to be able to determine its validity in your business.

Today's Industry Standard Benchmarks

There are a couple of primary players in the benchmark game today. The Transaction Processing Performance Council (TPC) is probably the oldest organization and, in my opinion, the most balanced and impartial. Although their tests were at least partially inspired by the old TP1 tests from IBM, the first TPC-A test was really an outgrowth of the DebitCredit test, with a lot of honest thought given to how to make these tests fair. For an excellent insight into what can make or break a benchmark, I recommend reading Omri Serlin's account of the history of the TPC and those first tests. This is a guy with a lot of credibility.

The other major test organization today is SPEC, the Standard Performance Evaluation Corporation. The primary difference between the two groups is that SPEC focuses more on CPU-level measurements. For example, the new version of the Web server tests relies on a simulated back-end. Other tests cover NFS file systems and graphics performance. The JVM tests are all designed to measure basic machine-level functions like the JIT compiler or floating-point arithmetic. The TPC tests, on the other hand, are intended to model the entire transaction stream exactly, from front to back. Not only that, TPC tests require external auditing, which is probably a primary reason that SPEC tests have achieved more participation than TPC tests. The SPEC organization recently lost its long-time guiding light when its president of a decade and a half, Kaivalya Dixit, passed away in November. I will be interested to see what effect this might have on the direction of the organization as a whole.

Here's an interesting note for those of you concerned about raw performance. The SPECjbb2000 test compares Java Virtual Machine (JVM) execution pretty much at the bare-metal level, doing CPU-intensive tasks. The result is a raw performance number, which is reported along with the number of CPUs used to generate it (nowadays, they actually report the number of CPU cores, to properly measure chips with multiple cores per chip). When I divided the raw performance by the number of CPU cores, the top 10 machines were smaller machines with four or fewer cores (the one exception was an 8-way p5 Model 570). I think this is reasonable; the more CPUs you have, the higher your overall number but the lower your per-CPU number, due to the overhead of managing the multiple CPUs. The interesting thing was that all of those machines except two were xSeries or p5 boxes. But even cooler was the performance of the big boxes. For anything with 16 or more CPUs, the top three performers were pSeries boxes--a couple of Model 570s (one each on AIX and Linux) and a Model 595 on AIX. But the real shocker was number four: a 16-way (32 core) i5 Model 595!
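
To make the per-core arithmetic concrete, here's a minimal Java sketch of that ranking. Everything in it is an assumption for illustration: the class and method names are mine, and the system labels and scores are made-up placeholders, not actual SPEC results.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

final class PerCoreRanking {
    /** One published result: a label, the raw score, and the core count. */
    static final class Result {
        final String system;
        final double rawScore;
        final int cores;

        Result(String system, double rawScore, int cores) {
            if (cores <= 0) {
                throw new IllegalArgumentException("cores must be positive");
            }
            this.system = system;
            this.rawScore = rawScore;
            this.cores = cores;
        }

        /** Raw score divided by the number of cores. */
        double perCore() {
            return rawScore / cores;
        }
    }

    /** Returns a new list sorted by per-core score, highest first. */
    static List<Result> rankByPerCore(List<Result> results) {
        List<Result> sorted = new ArrayList<>(results);
        sorted.sort((a, b) -> Double.compare(b.perCore(), a.perCore()));
        return sorted;
    }

    public static void main(String[] args) {
        // Hypothetical numbers, chosen only to show the effect.
        List<Result> results = Arrays.asList(
            new Result("big-32-core", 1_200_000, 32),
            new Result("small-4-core", 200_000, 4));
        for (Result r : rankByPerCore(results)) {
            System.out.printf("%-14s raw=%.0f cores=%d perCore=%.0f%n",
                r.system, r.rawScore, r.cores, r.perCore());
        }
    }
}
```

With these placeholder figures, the big box wins on raw score but the small box comes out ahead per core, which is the pattern described above.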

So, if you want Java performance in a little box, it's IBM xSeries or p5; and for a big box, it's p5 or i5. Who'd'a thunk it?

One other note about the tests: The overwhelming favorite JVM is IBM's JRE 1.4.2. The i5 test was reported last October using JRE 1.4.2. There were several older iSeries benchmarks posted, all back in 2000, all using JRE 1.3.0 or older. And every one was horrible, with numbers roughly one-eighth those of the newer JRE. I've said over the years that the iSeries JVM wasn't very fast, and evidently I was right. But according to these numbers, that may no longer be the case. And for those of you who have managed to get decent performance out of the box in these bad years, you may be in for an extraordinary performance boost with the newer boxes.

Benchmarking vs. Benchmarketing

Even back in the beginning, benchmarks were suspect. First, there's the idea of who is running the benchmarks. If Blog sponsors a test and the BlogPC outscores the iWap, is anybody really surprised? I didn't think so. The only surprise would be if the iWap outscored the BlogPC, at which point I'd predict a spike in iWap sales as well as an immediate opening in the BlogPC testing department.

This extends to the case studies and white papers you'll see from "independent" sources such as Forrester, Gartner, and the ITAA. I'm not going to go into great detail, but if you see something like "in a report commissioned by Microsoft, Forrester Research found that ...", I don't think you'd be paranoid to assume that Forrester found exactly what Microsoft wanted them to find. Similarly, since the ITAA is fundamentally the PR arm of large IT corporations, they're going to say whatever will best fatten the bottom line of their sponsors. Note that many of these organizations have serious misconceptions when it comes to the real industry of software development; for example, the ITAA considers the key feature of open-source software to be "the ability to modify the source code." This misses several points, including the fact that iSeries customers have been able to modify their source code since the earliest days of the platform and that the key issue in open source is licensing, not modification.

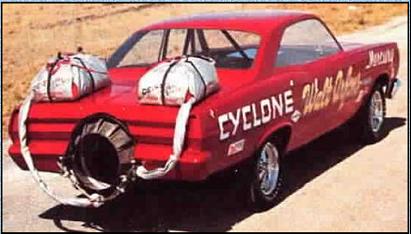

Then there's the concept of "tuned" machines, where the contestants are configured to eke out every last iota of performance for that particular test, even though the computer is then useless for just about any normal purpose. It's like funny cars vs. stock cars; the funny cars are basically just a fiberglass shell around a machine that has nothing to do with the original stock car. You can't drive it to the grocery store, but you can hit 300 mph in the quarter mile.

Figure 1: Here's a 1967 Mercury Comet "configured" for a TPC-C test.

Another component of benchmarks is the mathematics involved. If you have more than one test run, then you immediately start getting into the mathematical esoterica of statistical analysis. How many runs are being made? What are the maximum and minimum run times, and what explains the difference? What is the average time? What is the median? Do you throw out outliers? It quickly gets confusing.
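
Here's a rough Java sketch of how those questions turn into numbers. The class name, the method names, and the run times are all hypothetical, and the "trimmed mean" (drop the fastest and slowest runs before averaging) is just one common way to handle outliers, not a standard any benchmark body requires.

```java
import java.util.Arrays;

final class RunStats {
    /** Arithmetic mean of the run times. */
    static double mean(double[] xs) {
        if (xs.length == 0) {
            throw new IllegalArgumentException("no runs");
        }
        double sum = 0;
        for (double x : xs) {
            sum += x;
        }
        return sum / xs.length;
    }

    /** Middle value of the sorted run times (average of the two middle values if even). */
    static double median(double[] xs) {
        if (xs.length == 0) {
            throw new IllegalArgumentException("no runs");
        }
        double[] s = xs.clone();
        Arrays.sort(s);
        int mid = s.length / 2;
        return (s.length % 2 == 1) ? s[mid] : (s[mid - 1] + s[mid]) / 2.0;
    }

    /** Mean after discarding the `trim` fastest and `trim` slowest runs. */
    static double trimmedMean(double[] xs, int trim) {
        if (trim < 0 || 2 * trim >= xs.length) {
            throw new IllegalArgumentException("trim leaves no runs");
        }
        double[] s = xs.clone();
        Arrays.sort(s);
        return mean(Arrays.copyOfRange(s, trim, s.length - trim));
    }

    public static void main(String[] args) {
        // Hypothetical run times in seconds; the last run is an obvious outlier.
        double[] seconds = {12.1, 11.9, 12.3, 12.0, 19.8};
        double[] sorted = seconds.clone();
        Arrays.sort(sorted);
        System.out.println("min     = " + sorted[0]);
        System.out.println("max     = " + sorted[sorted.length - 1]);
        System.out.println("mean    = " + mean(seconds));
        System.out.println("median  = " + median(seconds));
        System.out.println("trimmed = " + trimmedMean(seconds, 1));
    }
}
```

A single slow run drags the mean upward while the median barely moves, which is why a report that quotes only "the average" deserves a second look.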

Finally, you have to take into account the test itself and what is actually being tested. It depends on the test, but let's look at a simple database performance test. When you read the database, is information being cached? Does the test run better with one job at a time or multiple jobs running simultaneously? (This last question is of crucial importance for the real world, since chances are you won't be dedicating a single machine to each of your production tasks.)
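
The caching question is easy to demonstrate with a small Java sketch that times the same task several times and reports the first ("cold") run separately from the later ("warm") ones. This is an illustration under stated assumptions: the class and method names are mine, `slowLookup` is a made-up stand-in for a record fetch, and the in-memory map only mimics a cache. It is not a test of any real database.

```java
import java.util.HashMap;
import java.util.Map;

final class ColdWarmTimer {
    /** Runs the task `runs` times and returns each elapsed time in nanoseconds. */
    static long[] timeRuns(Runnable task, int runs) {
        if (runs < 1) {
            throw new IllegalArgumentException("runs must be at least 1");
        }
        long[] nanos = new long[runs];
        for (int i = 0; i < runs; i++) {
            long start = System.nanoTime();
            task.run();
            nanos[i] = System.nanoTime() - start;
        }
        return nanos;
    }

    /** Average of every run after the first, which is treated as the cold run. */
    static double warmAverage(long[] nanos) {
        if (nanos.length < 2) {
            throw new IllegalArgumentException("need at least two runs");
        }
        long sum = 0;
        for (int i = 1; i < nanos.length; i++) {
            sum += nanos[i];
        }
        return (double) sum / (nanos.length - 1);
    }

    /** Hypothetical stand-in for a slow record fetch. */
    static long slowLookup(int key) {
        long h = key;
        for (int i = 0; i < 20_000; i++) {
            h = h * 31 + i;
        }
        return h;
    }

    public static void main(String[] args) {
        Map<Integer, Long> cache = new HashMap<>();
        // The first pass fills the cache; later passes only hit it.
        Runnable readAll = () -> {
            for (int key = 0; key < 1_000; key++) {
                cache.computeIfAbsent(key, ColdWarmTimer::slowLookup);
            }
        };
        long[] nanos = timeRuns(readAll, 5);
        System.out.printf("cold run: %d ns%n", nanos[0]);
        System.out.printf("warm avg: %.0f ns%n", warmAverage(nanos));
    }
}
```

Whether a published figure is the cold number, the warm number, or a blend of the two is exactly the kind of assumption you need to know before trusting it. Keep in mind that on a JVM the first run also pays for JIT warm-up, so the gap here is not purely a caching effect.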

With all this negativity, you might get the idea that I don't like benchmarks, but that's not true. Used correctly, I think benchmarks are a great tool, no matter how primitive. At the very least, benchmarks can point to problem areas in a design, and run properly, they can help you avoid potentially troublesome design decisions--or at least make sure you're making those decisions based on facts rather than hype. De-hyping the hype is one reason why I started the IAAI Web site, which I'll get back to a little later. There are also some excellent online articles addressing these very issues; a favorite of mine is on the Dell Web site.

Testing on the iSeries

So how does all this relate to the iSeries? Well, we have to realize that the iSeries tends to do things differently than any other machine. Some things it does a little differently, some things a lot differently, but in either case, we need to be very careful when testing to make sure that we create a level playing field if we want to test against other platforms. At the same time, the incredible flexibility of the iSeries means that even on the same box, there are many ways of doing something. We need iSeries-only tests to determine which of the many options is the best for a given situation.

In my opinion, iSeries-only tests are more important than tests against other platforms. For example, one of the big problems we face as iSeries developers is the unfounded belief that other platforms perform better than the iSeries. I'm sure you've heard people say how much faster a program is when using SQL Server than the same program is when accessing an iSeries database. The problem is that the comparison is typically between some highly tuned SQL Server connection and some Visual Basic application using a standard ODBC connection to access a few records on the iSeries. This is hardly a fair test. Call a server program on the iSeries using the Java toolbox, or talk to a socket, or even use an RPG-CGI program, and I'll show you just how fast I can return a record. So, before we can compare the iSeries to other platforms, we first need to know the best way to write programs for the iSeries. Only then can we compare the iSeries in its best light.

Another bizarre concept I've heard recently is that Java is as fast as RPG at processing business rules, even when both are running on the iSeries. And despite the recent revelation that the 1.4.2 JVM is much faster than previous versions, I'm still highly skeptical that a SELECT can outperform a CHAIN. It just doesn't make sense to me, and no test I've run to date has proven me wrong.

Some Gotchas About Testing on the iSeries

There are definitely some issues to watch out for when testing on the iSeries. OS/400 is so much more sophisticated than any other popular operating system out there that everything needs to be taken into account during tests. For example, a poor security design can actually affect performance negatively, yet running multiple jobs simultaneously can actually increase performance, sometimes significantly.

Vern Hamberg, for one, always insists that one do a CLRPOOL when testing; and for certain circumstances, that makes a lot of sense. On the other hand, using CLRPOOL means you're removing one of the benefits of OS/400's sophistication: Native I/O performs far better when you don't clear your storage pools. In fact, in one of my benchmarks, I ran multiple jobs, each accessing a different part of a file. I found that by starting all the jobs simultaneously, overall performance increased dramatically. It seems that even reading a record close to one that another job will be fetching tends to make access to subsequent records faster.
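
The shape of that multi-job test can be sketched in plain Java: several jobs each read a different slice of one file, first one after another and then all at once. To be clear about the assumptions, this is not the benchmark I ran. It uses threads and an ordinary stream file rather than OS/400 jobs and a database file, all the names are hypothetical, and whether the concurrent pass is actually faster depends entirely on the machine.

```java
import java.io.IOException;
import java.io.RandomAccessFile;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

final class ParallelSliceRead {
    /** Sums the bytes in one slice of the file; each job opens its own handle. */
    static long sumSlice(Path file, long offset, int length) throws IOException {
        try (RandomAccessFile raf = new RandomAccessFile(file.toFile(), "r")) {
            byte[] buf = new byte[length];
            raf.seek(offset);
            raf.readFully(buf);
            long sum = 0;
            for (byte b : buf) {
                sum += b & 0xFF;
            }
            return sum;
        }
    }

    /** Reads the slices one after another. */
    static long sumSequential(Path file, int jobs, int sliceLen) throws IOException {
        long total = 0;
        for (int j = 0; j < jobs; j++) {
            total += sumSlice(file, (long) j * sliceLen, sliceLen);
        }
        return total;
    }

    /** Starts every job at once, one thread per slice, and waits for all of them. */
    static long sumConcurrent(Path file, int jobs, int sliceLen) throws Exception {
        ExecutorService pool = Executors.newFixedThreadPool(jobs);
        try {
            List<Future<Long>> futures = new ArrayList<>();
            for (int j = 0; j < jobs; j++) {
                final long offset = (long) j * sliceLen;
                Callable<Long> job = () -> sumSlice(file, offset, sliceLen);
                futures.add(pool.submit(job));
            }
            long total = 0;
            for (Future<Long> f : futures) {
                total += f.get();
            }
            return total;
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) throws Exception {
        int jobs = 4;
        int sliceLen = 1 << 20; // 1 MiB per job
        Path file = Files.createTempFile("bench", ".dat");
        try {
            byte[] data = new byte[jobs * sliceLen];
            for (int i = 0; i < data.length; i++) {
                data[i] = (byte) i;
            }
            Files.write(file, data);

            long t0 = System.nanoTime();
            long seq = sumSequential(file, jobs, sliceLen);
            long t1 = System.nanoTime();
            long con = sumConcurrent(file, jobs, sliceLen);
            long t2 = System.nanoTime();

            System.out.printf("sequential: %d ns (checksum %d)%n", t1 - t0, seq);
            System.out.printf("concurrent: %d ns (checksum %d)%n", t2 - t1, con);
        } finally {
            Files.deleteIfExists(file);
        }
    }
}
```

The checksums are there to confirm that both passes did the same work. Note also that the sequential pass runs first and warms the operating system's file cache for the concurrent pass, which is precisely the kind of hidden advantage a careful test has to account for.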

So What Do We Do?

I think we need to create a comprehensive suite of benchmark tests. I think we need a set of machine-intensive benchmarks like the SPEC tests, which measure everything from disk I/O to computation. We can compare native I/O to SQL to JDBC; math in RPG, COBOL, and Java; program-to-program calls using OPM, ILE, and service programs; RPG-to-Java using various methods; data queue performance; sockets performance; you name it. It would be nice if some of these programs could even run on PCs; the Java tests certainly could, and my guess is that there are some smart C programmers out there who could help with the sockets stuff.

Next, we need another set of programs dedicated to throughput. We could probably get some good direction from the TPC-C tests and then put together some tests based on end-to-end transactions. My guess is that the world would want to see the results of a browser-based technique first, but I think there's a call for thick-client performance as well. We may need to create an entire set of "business tasks" in this case--things like importing item information into an Excel spreadsheet or dumping sales results to a graph in a PDF file.

This is one part of what I plan to accomplish this year. I started this last year with the iSeries Advanced Architecture Initiative (the IAAI), but I haven't been able to devote a lot of time to it. By the time you read this, the IAAI Web site should have a couple of white papers and a number of new tests (including a re-run of Bob Cozzi's EVAL vs. MOVE tests). In addition, I'm going to start really trying to hammer out what a real test environment would look like. It seems to me that we're going to need to test more than just simple file maintenance; we'll need pricing and scheduling and shipping and inventory and all of the things we expect to see in a real, live system.

This will allow us to develop some guidelines and recommendations for architectures based on the workload of a given site. I'm pretty certain that the best answer for a high-volume, low-item-count online storefront will be completely different from the right solution for a long-lead-time, make-to-order shop.

After we've gotten the information on the best techniques for the different types of requirements, we may even try to replicate the results on other platforms. As I noted in the section on machine performance tests, the Java tests could certainly port, and anything else would require support from helpful people (or maybe vendors in the case of conversion or migration tools). All of this together might give us some real, hard numbers to guide IT decision makers in determining their long-term direction in hardware and software.

If you're interested in helping with this process or if you've got some suggestions as to what areas should be tested, please drop a line in the forums or contact me directly:

Joe Pluta is the founder and chief architect of Pluta Brothers Design, Inc. He has been working in the field since the late 1970s and has made a career of extending the IBM midrange, starting back in the days of the IBM System/3. Joe has used WebSphere extensively, especially as the base for PSC/400, the only product that can move your legacy systems to the Web using simple green-screen commands. Joe is also the author of E-Deployment: The Fastest Path to the Web, Eclipse: Step by Step, and WDSC: Step by Step. You can reach him at

MC Press Online