In today's increasingly digital economy, data is the fuel that runs your organization's applications, business processes, and decisions.

Editor's note: This article is an excerpt from Chapter 5, "Setting Data Policies, Standards, and Processes," of The Chief Data Officer Handbook for Data Governance (MC Press, 2015).

The Chief Data Officer (CDO) needs to establish a framework to document the decisions from the data governance program. In this chapter, we will discuss how the chief data office can set data policies, standards, and processes.

Prescribe Terminology for Data Policies

A number of terms, such as data policies, standards, processes, guidelines, and principles, are used to describe data decisions. The CDO must prescribe a consistent terminology for these decisions. In this book, we use a standard framework of data policies, standards, and processes. These artifacts may be found in many places, including in people's heads or embedded in broader policy manuals. Some organizations document their data policies in Microsoft Word or Microsoft PowerPoint and upload the documents to Microsoft SharePoint or an intranet portal. We begin this chapter with definitions of key terms:

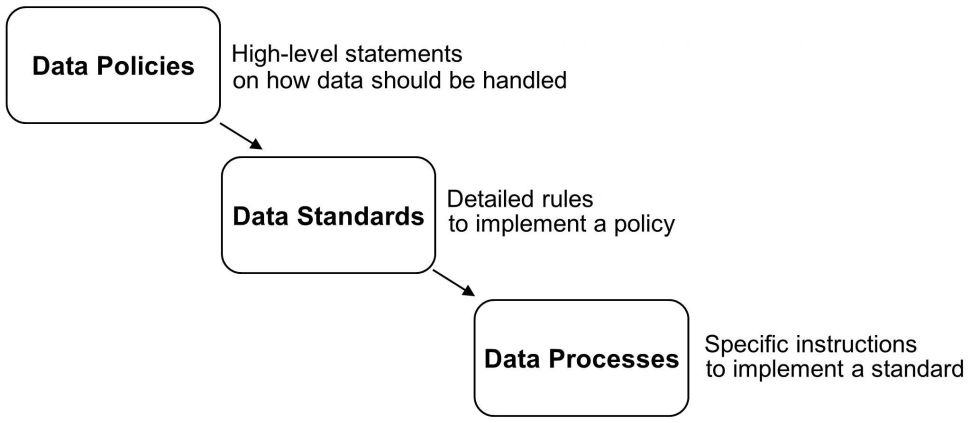

- Data policies—Data policies provide a broad framework for how decisions should be made regarding data. Data policies are high-level statements and need more detail before they can be operationalized. Each data policy may be supported by one or more data standards.

- Data standards—Data standards provide detailed rules on how to implement data policies.

- Data processes—Data processes provide special instructions on how to implement data standards. Each data standard may be supported by one or more data processes.

Data policies, standards, and processes follow a hierarchy, as shown in Figure 1.

Figure 1: Hierarchy of data policies, standards, and processes
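For readers who keep these artifacts in a repository rather than in documents, the hierarchy can be sketched as a small data structure. The following is a minimal illustration, not a prescribed model: the class names and the `outline` helper are assumptions made for this example, and the sample values are taken from the data quality management policy discussed later in this chapter.

```python
from dataclasses import dataclass, field


@dataclass
class DataProcess:
    name: str


@dataclass
class DataStandard:
    name: str
    # Each data standard may be supported by one or more data processes.
    processes: list[DataProcess] = field(default_factory=list)


@dataclass
class DataPolicy:
    name: str
    # Each data policy may be supported by one or more data standards.
    standards: list[DataStandard] = field(default_factory=list)


def outline(policy: DataPolicy) -> list[str]:
    """Return the hierarchy as indented lines, one per artifact."""
    lines = [policy.name]
    for standard in policy.standards:
        lines.append("  " + standard.name)
        for process in standard.processes:
            lines.append("    " + process.name)
    return lines


policy = DataPolicy(
    name="Data quality management",
    standards=[
        DataStandard("Accountability", [DataProcess("Data issue resolution")]),
        DataStandard("Critical data elements"),
        DataStandard("Data quality scorecard"),
    ],
)

print("\n".join(outline(policy)))
```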

Establish a Framework for Data Policies

The CDO must drive a framework for data policies within the following six categories:

- By EDM category—Policies in this category may cover data ownership, data architecture, data modeling, data integration, data security and privacy, master data management, reference data management, metadata management, data quality management, and information lifecycle management.

- By data domain—Policies in this category may cover customer, product, vendor, equipment, and chart of accounts. This category also includes policies to deal with customer duplicates and product hierarchies.

- By critical data element—Policies in this category include guidelines to identify critical data elements. Additional policies may cover specific attributes such as U.S. Social Security number, email address, phone number, and product identifier.

- By organization—Policies in this category deal with data issues that are specific to a given function or department, such as risk management or marketing. For example, risk management data policies may deal with the use of data to calculate value at risk. Marketing data policies may address customer segmentation, propensity scores, and contact preferences, such as do not call.

- By business process—Policies in this category may cover customer service and new product introduction. For example, the customer service policy may require phone representatives to search for an existing customer record before creating a new one.

- By big data domain—Policies in this category include the use of big data such as Facebook, Twitter, equipment sensor data, facial recognition, chat logs, and Web cookies. We have called out this category separately because there are a number of unique challenges in dealing with these emerging data types.

The remainder of this chapter will review some examples of data policies, standards, and processes.

Data Quality Management Policy

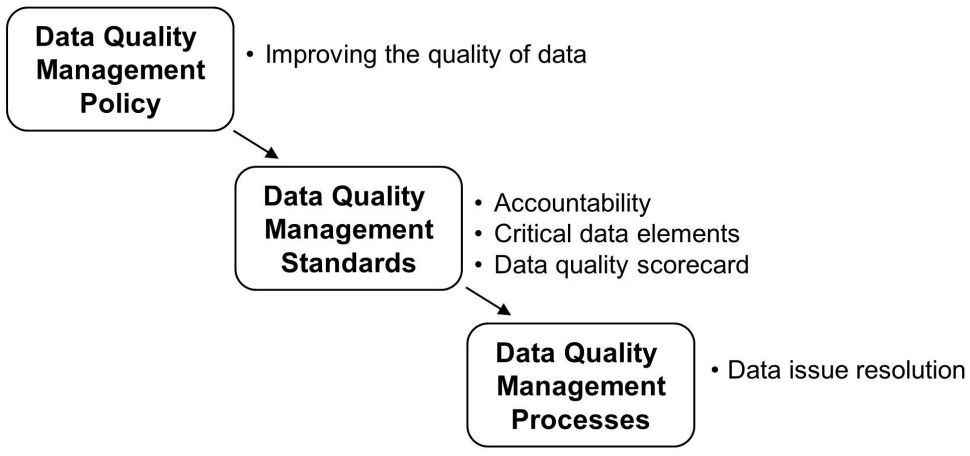

Figure 2 shows a hierarchy of policy, standards, and processes for data quality management. The overall data policy includes standards for accountability, critical data elements, and the data quality scorecard. The accountability data standard also refers to the data issue resolution process.

Figure 2: Data quality management policy, standards, and processes

Policy

A sample policy for data quality management is shown below:

Data quality management is a discipline that includes the methods to measure and improve the quality and integrity of an organization's data. We must adhere to an enterprise-approved process to manage and improve the quality of business-critical data.

Standards

The data quality management standards are as follows:

- Accountability—The data governance team must lead the overall data quality program. However, each data owner must assign one or more business data stewards to manage data quality for key systems and data domains. The responsibilities of the data steward include identifying critical data elements, creating business rules for data profiling, and resolving data issues.

- Critical data elements—The data steward must identify critical data elements, which will be the focus of the data quality program. Critical data elements must constitute not more than 10 to 15 percent of the total attributes in a data domain or data repository. These critical data elements must be used to create business rules that will drive the data quality scorecard. For example, "email address should not be null" is a business rule that relates to the "email address" critical data element.

- Data quality scorecard—The data governance team must manage a data quality scorecard to track key metrics by system and data domain. This scorecard must be updated on a monthly basis and will be circulated to key stakeholders.
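The critical data element threshold and the sample business rule can be expressed as simple checks. The sketch below makes several assumptions: it reads "10 to 15 percent" as an upper bound of 15 percent, it treats a blank email address the same as a null one, and the function names and sample records are invented for illustration.

```python
def critical_share(critical_count: int, total_attributes: int) -> float:
    """Fraction of attributes in a domain flagged as critical data elements."""
    if total_attributes <= 0:
        raise ValueError("total_attributes must be positive")
    return critical_count / total_attributes


def within_cde_limit(critical_count: int, total_attributes: int,
                     limit: float = 0.15) -> bool:
    """True when critical elements stay at or below the assumed 15 percent cap."""
    return critical_share(critical_count, total_attributes) <= limit


def email_not_null(record: dict) -> bool:
    """Business rule: email address should not be null (blank counts as null here)."""
    value = record.get("email")
    return value is not None and value.strip() != ""


def pass_rate(records: list[dict], rule) -> float:
    """Share of records that satisfy a rule; a candidate scorecard metric."""
    if not records:
        return 0.0
    return sum(1 for record in records if rule(record)) / len(records)


# Made-up sample records for illustration only.
customers = [
    {"id": 1, "email": "ann@example.com"},
    {"id": 2, "email": None},
    {"id": 3, "email": ""},
    {"id": 4, "email": "raj@example.com"},
]

print(within_cde_limit(12, 100))             # 12 of 100 attributes -> True
print(within_cde_limit(30, 100))             # 30 of 100 attributes -> False
print(pass_rate(customers, email_not_null))  # 2 of 4 records pass -> 0.5
```

A monthly scorecard could then record one such pass rate per business rule, system, and data domain.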

Process

Finally, the accountability data standard links to the data issue resolution process. This process states that the lead data steward must track data issues in a log that will be circulated to stakeholders on a periodic basis. This log must track the list of data issues, severity, assignee, date assigned, and current status.
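As a rough sketch, the issue log can be modeled with the five fields the process names. The severity scale, the status values, the helper function, and the sample entries below are assumptions for illustration; the source does not define them.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class DataIssue:
    description: str
    severity: str          # assumed scale: "high", "medium", "low"
    assignee: str
    date_assigned: date
    status: str = "open"   # assumed values: "open", "in progress", "resolved"


def open_issues_by_severity(log: list[DataIssue]) -> dict[str, int]:
    """Count unresolved issues per severity for the periodic circulation."""
    counts: dict[str, int] = {}
    for issue in log:
        if issue.status != "resolved":
            counts[issue.severity] = counts.get(issue.severity, 0) + 1
    return counts


# Made-up log entries for illustration only.
log = [
    DataIssue("Null email addresses in CRM", "high", "steward_a", date(2015, 3, 2)),
    DataIssue("Duplicate customer records", "high", "steward_b",
              date(2015, 3, 9), "in progress"),
    DataIssue("Inconsistent state codes", "low", "steward_a",
              date(2015, 2, 16), "resolved"),
]

print(open_issues_by_severity(log))  # {'high': 2}
```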

Metadata Management Policy

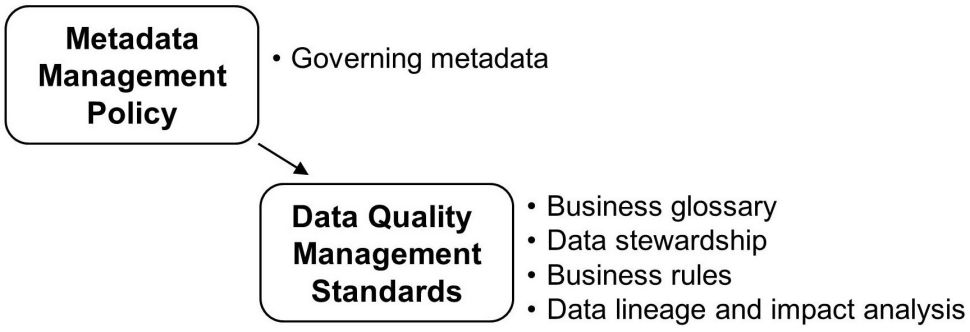

Figure 3 shows a hierarchy of metadata management policy and standards. The overall metadata management policy refers to the data standards for business glossary, data stewardship, business rules, and data lineage and impact analysis. No data processes have been developed in this case.

Figure 3: Hierarchy of metadata management policy and standards

Policy

The metadata management policy is shown below:

We must have quality metadata stored within an enterprise metadata repository to manage business terms, technical metadata, business rules, data quality rules, master data rules, reference data, data lineage, and impact analysis.

Standards

The metadata management policy relates to the following metadata management standards:

- Business glossary—The data governance team must maintain a business glossary with definitions of key business terms. These business definitions must be created and maintained for critical data elements using organizationally adopted naming and definition standards. The business glossary will also contain a data dictionary with the definitions of column and table names for key data repositories.

- Data stewardship—Data owners must assign data stewards to manage business terms and other data artifacts, such as business rules.

- Business rules—Business rules for critical data elements must be documented and kept up to date for each data repository and must be reflected within the business glossary.

- Data lineage and impact analysis—The metadata repository must ingest metadata from key systems, including relational databases, data modeling tools, data integration platforms, reports, analytic models, and Hadoop. The lineage of data elements must be documented and kept up to date. Impact analysis must also be performed.

Reference Data Management Policy

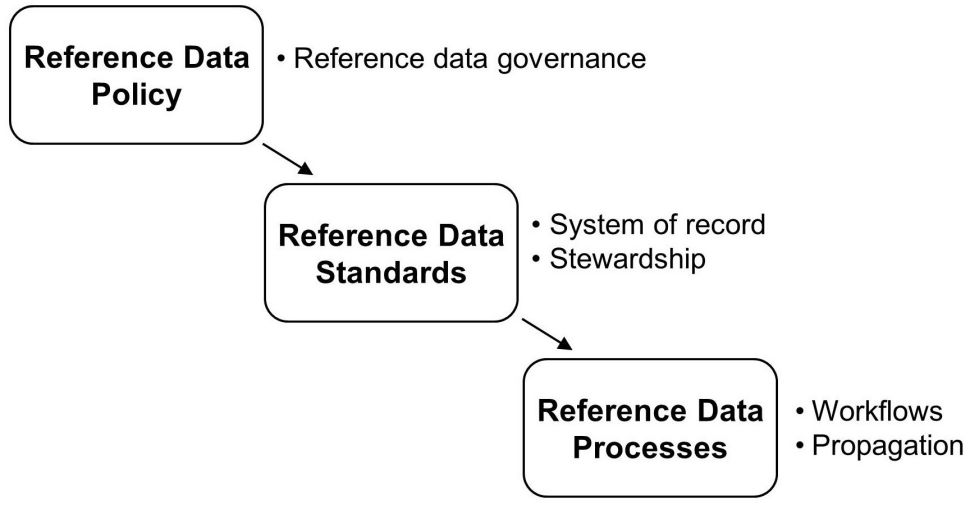

Let's review a hierarchy of reference data management policies, standards, and processes. As shown in Figure 4, the reference data policy relates to the system of record and stewardship standards. The system of record standard, in turn, relates to the data processes for workflows and propagation.

Figure 4: Reference data management policy and standards

Policy

The reference data policy is shown below:

We must identify and manage critical reference data along with the code mappings across critical applications.

Standards

A brief description of the reference data standards is shown below:

- System of record—A system of record shall contain an inventory of code tables along with a list of canonical or standard values and a mapping across systems. For example, "AL" is the state code for Alabama. The reference data system of record shall contain this information along with a mapping to state code "1" in another application because Alabama is the first state in an alphabetical list of states.

- Stewardship—The EDM team must assign code tables to data owners or data stewards who will be responsible for adding, modifying, and deleting code values.
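The system of record standard amounts to a set of canonical values plus a per-application mapping. The sketch below is one possible shape for it: the application names `app_a` and `app_b` are hypothetical, and the Alaska entry is added only to illustrate the same alphabetical numbering scheme described for Alabama.

```python
# Canonical (standard) state codes held in the system of record.
CANONICAL_STATES = {"AL": "Alabama", "AK": "Alaska"}

# Canonical code -> local code in each application.
# "app_a" and "app_b" are hypothetical application names.
CODE_MAPPINGS = {
    "AL": {"app_a": "AL", "app_b": "1"},
    "AK": {"app_a": "AK", "app_b": "2"},  # illustrative, same alphabetical scheme
}


def to_local(canonical_code: str, application: str) -> str:
    """Translate a canonical code into one application's local code."""
    return CODE_MAPPINGS[canonical_code][application]


def to_canonical(local_code: str, application: str) -> str:
    """Translate an application's local code back to the canonical code."""
    for canonical_code, per_application in CODE_MAPPINGS.items():
        if per_application.get(application) == local_code:
            return canonical_code
    raise KeyError(f"no mapping for {local_code!r} in {application!r}")


print(to_local("AL", "app_b"))      # 1
print(to_canonical("1", "app_b"))   # AL
print(CANONICAL_STATES[to_canonical("1", "app_b")])  # Alabama
```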

Processes

The system of record standard relates to the following data processes:

- Workflows—Prebuilt workflows must be used to make changes to the code tables. For example, a European country such as "Kosovo" can only be added to the list of country codes based on a recommendation from European finance and approval from global finance.

- Propagation—Reference data changes shall be propagated from the reference data hub to target applications across the enterprise. For example, when "Puerto Rico" is added in the state table, the reference data hub will propagate the change as state code "PR" in one application and as state code "51" in another application.
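The two processes can be combined in a small sketch: a change is accepted only when the required approvals are present, and it is then pushed to each target application under that application's own code. The `ReferenceDataHub` class, the approver role names, and the application names are all hypothetical; a real hub would typically be a commercial or in-house reference data tool rather than code like this.

```python
from dataclasses import dataclass, field


@dataclass
class ReferenceDataHub:
    # canonical code -> {application name -> local code}
    mappings: dict[str, dict[str, str]] = field(default_factory=dict)
    # application name -> that application's code table (local code -> label)
    targets: dict[str, dict[str, str]] = field(default_factory=dict)

    def add_code(self, canonical: str, label: str, local_codes: dict[str, str],
                 approvals: set[str], required: set[str]) -> None:
        """Workflow step: reject the change unless every required approval exists,
        then propagate the new value to each target application."""
        missing = required - approvals
        if missing:
            raise PermissionError(f"missing approvals: {sorted(missing)}")
        self.mappings[canonical] = dict(local_codes)
        for application, local_code in local_codes.items():
            self.targets.setdefault(application, {})[local_code] = label


hub = ReferenceDataHub()
hub.add_code(
    canonical="PR",
    label="Puerto Rico",
    local_codes={"app_a": "PR", "app_b": "51"},   # hypothetical applications
    approvals={"data_steward", "data_owner"},     # hypothetical approver roles
    required={"data_steward", "data_owner"},
)

print(hub.targets)
# {'app_a': {'PR': 'Puerto Rico'}, 'app_b': {'51': 'Puerto Rico'}}
```

For the Kosovo example, the `required` set would name the two parties mentioned above, European finance and global finance.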

Email Address Policy

Email address may be a critical data element for companies that want to reach a large number of customers electronically. The following topics form part of an overall data policy for email address:

- Completeness—The email address field should not be null.

- Conformity—Email addresses must be in the format xxxxx@xxx.xxx.

- Uniqueness—Email addresses must not be duplicated. This business rule addresses situations such as insurance agents entering their own email address in place of a customer's email address. The agents did this to avoid the insurer sending direct emails to the customer; however, the master data management (MDM) hub inadvertently matched different customers because they shared the same email address.

- Timeliness—Customer service representatives should validate email addresses whenever they are on the phone with a customer. An email address is considered stale if it has not been validated in the previous 12 months.

- Accuracy—The customer service department should identify any customers with bounced emails. Marketing must validate customer email addresses with external service providers such as Acxiom or Experian CheetahMail.
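Several of these rules lend themselves to automated profiling. The sketch below covers completeness, conformity, uniqueness, and timeliness under stated assumptions: the regular expression is a simplified stand-in for the "xxxxx@xxx.xxx" shape rather than a full email validator, 12 months is approximated as 365 days, and duplicates are compared case-insensitively. Accuracy is omitted because it depends on bounce data and external providers.

```python
import re
from collections import Counter
from datetime import date, timedelta

# Simplified pattern: one "@", no whitespace, at least one dot in the domain part.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")
STALE_AFTER = timedelta(days=365)  # approximates "the previous 12 months"


def is_complete(email) -> bool:
    """Completeness: the field is neither null nor blank."""
    return email is not None and email.strip() != ""


def is_conformant(email: str) -> bool:
    """Conformity: the value matches the simplified address pattern."""
    return bool(EMAIL_PATTERN.match(email))


def is_stale(last_validated: date, today: date) -> bool:
    """Timeliness: the address was last validated more than a year ago."""
    return today - last_validated > STALE_AFTER


def duplicated_emails(emails) -> set:
    """Uniqueness: addresses that appear more than once (case-insensitive)."""
    counts = Counter(e.strip().lower() for e in emails if e)
    return {email for email, n in counts.items() if n > 1}


print(is_conformant("ann@example.com"))                 # True
print(is_conformant("ann@example"))                     # False
print(is_stale(date(2014, 1, 1), date(2015, 6, 1)))     # True
print(duplicated_emails(
    ["agent@example.com", "Agent@example.com", "ann@example.com", None]
))                                                      # {'agent@example.com'}
```

The uniqueness check is the one that would surface the insurance agent scenario, where many customer records share a single agent's address.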

Facebook Data Policy

As part of the chief data office, the data governance team must also establish data policies for emerging types of data such as social media.

Establish Process for Data Policy Changes

As part of the chief data office, the data governance team must establish a process to manage changes to data policies, standards, and processes. The appropriate body, such as the data governance steering committee or data governance council, must approve these changes.

Editor's note: Learn more with the book The Chief Data Officer Handbook for Data Governance.
