I’ve been asked this question many times recently. The short answer is yes…and no. Keep reading to get a grasp on these two very important concepts and get to the long answer.

As explained in previous articles (parts 1, 2, and 3), having a lot of data is not, by itself, very helpful. You need tools (like the Hadoop Framework I covered in the last couple of articles) and techniques to extract value from the data. Machine Learning (ML) techniques are a good place to start. However, things get a bit fuzzy when you start reading about this topic, because it’s often mixed up with Artificial Intelligence (AI), and some articles go as far as saying that ML and AI are one and the same. This is not entirely correct. Let me start by explaining what ML is and how you can put it to good use with minimal effort.

What’s Machine Learning, Anyway?

Machine Learning can be defined, very crudely, as an educated guess: You feed a “program” loads of data and ask it a question related to that data. Note that you can’t give the “program” oranges and ask for lemonade; the question has to be grounded in the data, because the “learning” in ML is not exactly like the human concept of learning. Machines can’t (not yet, and not in this context, anyway) infer knowledge or express curiosity.

One of the most commonly used examples to illustrate ML is the Titanic wreck. The challenge is as follows: Given the passenger manifest, determine which passengers were more likely to survive the sinking. By applying ML algorithms to the data, you can get a reasonably accurate answer. I used the word “techniques” and put “program” in quotes earlier because you don’t actually write a regular program, with explicit rules, to process the data and get the answer. Instead, you apply ML algorithms to the data. These algorithms detect patterns in the input data, identify similar patterns in new data, and make data-driven predictions. In the Titanic scenario, the passenger manifest records each passenger’s status (survived or perished), which allows the algorithm to predict what would happen to a passenger in similar circumstances. Because these algorithms derive their behavior from empirical data rather than from hard-coded instructions, they can adapt to new circumstances in ways a program with specific instructions cannot. In other words, the machines are “trained” to learn from experience how to perform a task.
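To make the idea of “training” a bit more concrete, here is a deliberately tiny sketch in Python. It assumes a handful of made-up records shaped like the Titanic manifest (sex, passenger class, survived). The data and the function names `train` and `predict` are hypothetical. In this sketch, “training” is nothing more than computing the observed survival rate for each group of similar passengers, and “predicting” means looking up that rate for a new passenger. Real ML algorithms (decision trees, logistic regression, and so on) are far more sophisticated, but the principle of learning from examples with known outcomes is the same.

```python
from collections import defaultdict

# Hypothetical toy records in the spirit of the Titanic manifest:
# (sex, passenger_class, survived). These are NOT real manifest data.
TRAINING_DATA = [
    ("female", 1, True), ("female", 1, True), ("female", 1, False),
    ("female", 3, True), ("female", 3, False), ("female", 3, False),
    ("male", 1, True), ("male", 1, False), ("male", 1, False),
    ("male", 3, False), ("male", 3, False), ("male", 3, True),
]


def train(records):
    """Learn the observed survival rate for each (sex, class) group."""
    counts = defaultdict(lambda: [0, 0])  # group -> [survivors, total]
    for sex, pclass, survived in records:
        group = counts[(sex, pclass)]
        group[0] += int(survived)
        group[1] += 1
    return {group: s / n for group, (s, n) in counts.items()}


def predict(model, sex, pclass, threshold=0.5):
    """Predict survival if the learned rate for the group exceeds the threshold."""
    rate = model.get((sex, pclass))
    if rate is None:
        raise KeyError(f"no training examples for {(sex, pclass)}")
    return rate > threshold


model = train(TRAINING_DATA)
print(predict(model, "female", 1))  # True  (2 of 3 survived in the toy data)
print(predict(model, "male", 3))    # False (1 of 3 survived in the toy data)
```

Note that the program never contains a rule like “women in first class survive.” That pattern comes entirely from the data, which is the essential difference from a conventional program.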

But ML can be used to do much more: Item recommendation (like Amazon’s “Customers who bought this item also bought” functionality), speech recognition (such as the technology behind Skype’s automatic translation feature), handwriting recognition, medical diagnosis, sales prediction, and stock trading, just to name a few examples.
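One simple way to approximate a “Customers who bought this item also bought” feature is to count how often items appear together in the same order. The sketch below does exactly that. It uses hypothetical order data and hypothetical helper names (`build_cooccurrence`, `also_bought`), and it is not a description of how Amazon actually implements its recommendations.

```python
from collections import Counter
from itertools import combinations

# Hypothetical order histories; each set is one customer's basket.
ORDERS = [
    {"book", "lamp"},
    {"book", "lamp", "pen"},
    {"book", "pen"},
    {"lamp", "bulb"},
]


def build_cooccurrence(orders):
    """Count how often each ordered pair of items appears in the same basket."""
    pairs = Counter()
    for basket in orders:
        for a, b in combinations(sorted(basket), 2):
            # Count both directions so lookups by either item are easy.
            pairs[(a, b)] += 1
            pairs[(b, a)] += 1
    return pairs


def also_bought(pairs, item, top_n=2):
    """Return the items most often bought together with `item`."""
    scores = Counter({b: n for (a, b), n in pairs.items() if a == item})
    return [other for other, _ in scores.most_common(top_n)]


pairs = build_cooccurrence(ORDERS)
print(also_bought(pairs, "lamp", top_n=1))  # ['book']
```

The more orders the system sees, the more reliable these counts become, which is one reason ML-driven features tend to improve as data accumulates.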

How Does That Work?

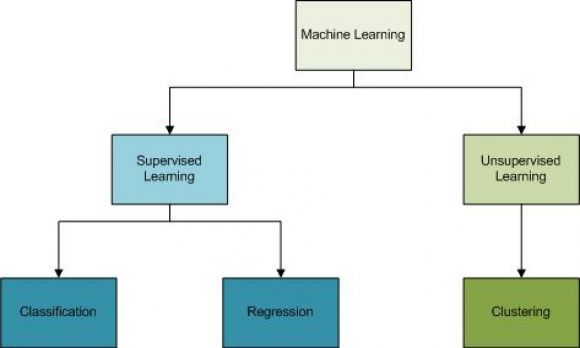

In very broad terms, you insert loads and loads of data into an ML program and get back an answer. The type of answer depends on the type of algorithm used. ML algorithms can be divided into two major categories (Supervised and Unsupervised Learning) and several subcategories (Classification, Regression, and Clustering), as shown in Figure 1.

Figure 1: Machine Learning algorithm types

In Supervised Learning, you feed the algorithm data with known outcomes (generally, the more data, the better the results), such as the Titanic passenger manifest with each passenger’s status (survived or perished), which allows you to get concrete answers. These answers fall into two subcategories:

- Classification, in which you fit an item onto a certain “shelf” (a category). Going back to the Titanic example, this type of algorithm can determine, for instance, whether an adult female passenger traveling in first class would likely have survived the wreck.

- Regression, in which the outcome is not a classification (or a label, if you will) but a numeric value. As you’ve probably guessed, this is the kind of algorithm stock-trading bots rely on to predict prices and decide when to buy or sell stocks.
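Regression can be illustrated with its simplest form, ordinary least-squares linear regression. You fit a straight line to known (input, outcome) pairs, then read a numeric prediction off that line. The numbers below are made up (think advertising spend versus units sold, a sales-prediction flavor), and `fit_line` is a hypothetical helper. Real stock-trading bots use far more elaborate models.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two paired observations")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical data: advertising spend (thousands) vs. units sold (thousands).
spend = [1.0, 2.0, 3.0, 4.0]
units = [3.0, 5.0, 7.0, 9.0]

slope, intercept = fit_line(spend, units)
print(slope, intercept)          # 2.0 1.0
print(slope * 5.0 + intercept)   # 11.0 (predicted units for a spend of 5.0)
```

The output is a number, not a label, which is exactly what separates regression from classification.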

On the other side of the branch, you’ll find Unsupervised Learning. This type of algorithm works with raw data that doesn’t contain known outcomes. Because there are no outcomes to learn from, these algorithms instead look for structure in the input data, typically by grouping, or clustering, it into sets that share one or more characteristics. There are a few subcategories, but the most important is Clustering. It’s used, for instance, in direct marketing to group clients or prospects into sets and send specifically targeted messages to each set. There’s a third category, not shown in Figure 1, named Semi-supervised Learning, which is a mix of the two. I won’t go into detail about it here, because its specifics would only add confusion at this point.
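Clustering can be sketched with k-means, one of the best-known clustering algorithms. It is chosen here only for illustration, because the article doesn’t name a specific one. Note that the input has no outcomes at all, just raw measurements. The customer data is hypothetical. Seeding the centroids with the first k points is a simplifying assumption that makes the output reproducible. Libraries typically use smarter seeding, such as k-means++.

```python
import math


def kmeans(points, k, iterations=100):
    """Plain k-means: alternate assigning points to the nearest centroid and
    moving each centroid to the mean of its assigned points."""
    if not 0 < k <= len(points):
        raise ValueError("k must be between 1 and the number of points")
    centroids = [tuple(p) for p in points[:k]]  # naive deterministic start
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Move each centroid to the mean of its cluster (keep it if the cluster is empty).
        new_centroids = [
            tuple(sum(coord) / len(c) for coord in zip(*c)) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
        if new_centroids == centroids:  # converged: assignments no longer change
            break
        centroids = new_centroids
    return centroids, clusters


# Hypothetical customers: (orders per year, average order size).
customers = [(1, 2), (20, 30), (2, 1), (1, 1), (21, 29), (19, 31)]
centroids, clusters = kmeans(customers, k=2)
print([tuple(round(v, 2) for v in c) for c in centroids])  # [(1.33, 1.33), (20.0, 30.0)]
```

The algorithm finds the occasional buyers and the frequent, big spenders on its own. A marketer could then send each set its own targeted message.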

ML is a very exciting topic because it allows a programmer with minimal knowledge of Python (for instance) to perform extensive data analysis very quickly and effectively. If this article sparks enough interest, I might write a few more articles explaining how to start exploring this brave new world!

From what you’ve read so far, you’ve probably gathered that ML is a broad field in its own right and isn’t necessarily tied to AI; this is the “no” part of the short answer. Now let’s get to the “yes” part of that same answer, with an explanation of what AI is.

OK, So That’s Machine Learning! How About Artificial Intelligence?

When you hear “Artificial Intelligence,” the first things that probably come to mind are Terminator’s Skynet or 2001: A Space Odyssey’s HAL 9000. Well, we’re not there…yet.

The more prosaic, less science-fiction version of AI is much older than these films. It dates back to the 1950s, when the mathematician Alan Turing conceived the famous Turing Test and introduced it to the world in his paper “Computing Machinery and Intelligence.” Turing asked, “Can machines think?” Because “thinking” is difficult to define, Turing chose to “replace the question by another, which is closely related to it and is expressed in relatively unambiguous words.” Turing’s new question was, “Are there imaginable digital computers which would do well in the imitation game?” This frames AI as the science of building technology that mimics how a human would respond in a given circumstance. In other words, AI involves machines that behave (think) like humans, in general. Because this definition is so broad, the field of AI was broken down into smaller sets of problems, known as the major AI goals (or problems):

- Deduction, Reasoning, Problem-Solving—This problem was largely solved (pun intended) a long time ago. By the late 1980s and early 1990s, we had machines capable of deduction, reasoning, and problem-solving, including methods for dealing with uncertain or incomplete information that borrow concepts from fields as diverse as probability and economics.

- Knowledge Representation—This goal is of paramount importance to AI, because AI needs to amass and relate huge amounts of knowledge to “think.” Without a proper representation system, information cannot become knowledge. Work on this goal began to bear fruit in the late 1960s, with John McCarthy’s work.

- Planning and Scheduling—Just like knowledge representation, planning is a critical part of AI’s “thinking” process. It’s probably the easiest concept to grasp because we’ve all seen (or even written) programs capable of calculating a manufacturing plant’s resource needs for a certain period.

- Machine Learning (ML)—Finally, here’s the “yes” part of the short answer! ML is indeed one of the goals of AI, and that’s easy to explain: In order to “think,” AI needs to make decisions, and ML can be a big help with that process.

- Natural Language Processing—Natural Language Processing (NLP) is what enables humans to communicate more easily with their future machine overlords. Just kidding. NLP is an integral part of the knowledge acquisition process, because AI can learn (a lot) from written human knowledge: books, the Internet, publications, and so on. It also enables AI-powered robot companions, such as robots powered by IBM’s Watson. Being able to communicate with us humans in our language is also important because it allows AI to adapt to different circumstances. In other words, NLP helps AI “grow.”

- Perception (Computer Vision)—This goal is one of the most exciting (and useful) to humans. Computer vision, combined with developments in other areas such as motion and manipulation, planning, and problem-solving, helps AI-powered machines (think robots and such) perform tasks such as driving cars, replacing humans in dangerous environments, and much more.

- Creativity—This is a very interesting goal and the hardest to achieve, with the exception of General Intelligence, described next. Getting a machine to paint a picture, for instance, is nothing new; it was done years ago. But those machines created exact replicas; they didn’t actually employ any form of creativity. I’m talking about something different here: AI-powered machines that can paint a fully original picture in the style of Van Gogh or Picasso. That is something new and exciting. One well-known example of this creative flair is Google’s Deep Dream, which turns ordinary images into dreamlike and really, really crazy ones. Naturally, creativity is not limited to art, and AI will, someday, achieve levels of creativity matching or even surpassing the best humans are capable of.

- General Intelligence (or strong AI)—The last and most distant goal is General Intelligence (think Skynet, HAL 9000, etc.) or, as some (like Elon Musk) call it, “the beginning of the end of the human race.” Achieving general intelligence means that the AI is “alive” in the sense that it’s aware of itself and can reason, plan, understand, see, and so on, like (or even better than) a human. This means that a computer “program” will achieve human levels of intelligence, not in a specific area, but in general, broader terms. This milestone, often associated with the so-called technological singularity, is probably years away, but it’ll happen. Many big names in the tech industry are working on it, and it’s only a matter of time. Let’s just hope none of them gives birth to Skynet.
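Classic rule-based systems give a feel for how two of the goals above, deduction and knowledge representation, fit together: Knowledge is represented as facts and if-then rules, and deduction means deriving new facts from them. The sketch below is a minimal forward-chaining loop built on those assumptions. The facts, the rules, and the `forward_chain` helper are illustrative only and do not describe any particular AI system.

```python
def forward_chain(facts, rules):
    """Repeatedly apply if-then rules until no new facts can be derived.

    Each rule is a (premises, conclusion) pair: if every premise is a known
    fact, the conclusion is added as a new fact.
    """
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if conclusion not in known and all(p in known for p in premises):
                known.add(conclusion)
                changed = True
    return known


# Hypothetical knowledge base, written as plain strings for simplicity.
RULES = [
    ({"socrates is a man"}, "socrates is mortal"),
    ({"socrates is mortal", "mortals need food"}, "socrates needs food"),
]
FACTS = {"socrates is a man", "mortals need food"}

derived = forward_chain(FACTS, RULES)
print("socrates needs food" in derived)  # True
```

Neither derived fact was stated directly. Both follow from the rules, and the second one depends on the first. Chaining rules like this is the essence of machine deduction.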

That’s the long answer. In short, Machine Learning is part of Artificial Intelligence (so “yes” to the original question), but it’s a new, exciting, ever-evolving field in itself (so “no” to that very same question).

This article concludes my introduction to Big Data (which ended up being a not-so-small one). I hope you’ve found it interesting. It’s a big, brave new world that’s worth exploring, so go ahead and keep learning!

MC Press Online