Here's an alternative to data queues that works especially well when your primary input is a database file.

I've written two articles on how to use data queues. Data queues are especially practical when you're sending many messages with different layouts between multiple clients and servers. They're easy to create and don't require much in the way of initial design. Simply create the data queue and away you go!

The problem is that you do need extra objects—typically, externally described data structures—to define the layout of the different messages. It's not really a big deal with a complex architecture, but when you need a very directed type of communication, the data queue can be overkill. And that's when you can take advantage of a good old DB2 file.

The Simple Solution

Database tables make the most sense when you have a single-target fire-and-forget application. These usually appear in an inter-platform environment, where an event on one platform needs to be mirrored on another platform. This could be a high availability scenario or a migration environment where legacy events need to be posted to the new business solution (or vice versa). Let's take the simple case of a master file change (probably the best use of this design).

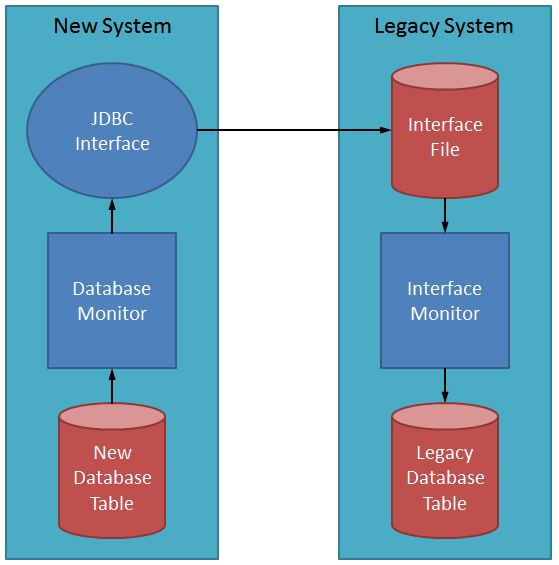

Figure 1: Here's a simple master file synchronization application.

Whenever you have two different systems that need to share the same master data, you need some sort of synchronization process. In the old days, that might have been done through nightly batch jobs that simply shipped master files across the network. In today's world, though, you often need a much more agile approach, in which changes on one machine are mirrored almost immediately on the other.

Figure 1 shows the outline of just such a business flow between a new system and the legacy system it communicates with. The new system is the system of record, meaning that all master file changes are made there. Typically in this environment, maintenance is disabled on the legacy system, and the only way to get changes into the legacy database is to maintain the corresponding data on the new system. With that methodology, changes clearly have to be transmitted to the legacy system immediately.

The flow itself is simple. The database monitor detects maintenance to the new database table and uses some sort of interface (for example purposes, I chose JDBC) to write a record to an interface file on the legacy system. A monitor on the legacy system in turn senses the new record in the interface file and updates the appropriate legacy table.

Each file has its own layout, and the interface file is the only table the two systems have to agree upon. It contains only those fields from the new system that are pertinent to the legacy system, and it's the only object whose changes need to be coordinated between the two systems. That's why I like using an interface file when the flow between the two processes is narrowly defined.

Where Does EOFDLY Come In?

This article, though, is not just about writing an interface; it's supposed to be about the EOFDLY keyword. Why would we use EOFDLY? Well, here's the main routine for a typical monitor program:

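```rpgle
dou %shtdn();            // loop until the job or subsystem is ending
  read INTERFACE;
  if %eof(INTERFACE);
    DoDelay();           // nothing to process: wait, then try again
    iter;
  endif;
  Process();             // edit the record and maintain the master file
enddo;
```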

Very simple and to the point. The primary loop is conditioned by the %shtdn BIF, which returns true when either the job or the subsystem is terminating. Using this technique, you can end the job or the subsystem with OPTION(*CNTRLD), and the monitor can end gracefully, performing any cleanup that might be necessary. This is a good habit to get into with monitor programs.

This main routine calls two subprocedures: Process and DoDelay. Process edits the record and, if the data is valid, maintains the master file (as you'll see later, this procedure stays the same whether or not we use EOFDLY). The piece that interests us here is DoDelay. I won't go into detail on that routine; you've probably written one before. You can call a CL program to do a DLYJOB, or you can use the C runtime library to call the sleep() function. Pick your favorite technique to delay the program for a few seconds (or minutes, depending on the application) before it tries again.

But here's the annoying part: how do you debug this from the command line? Usually, you'd write a record to your interface file, call the program in debug mode, and step through it. That works fine until processing is finished. Then your program loops indefinitely until you use System Request option 2 to cancel it. That's not my favorite technique, since the program never gets a chance to clean up.
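For reference, a DoDelay built on the C runtime sleep() function might look something like the following. This is a minimal sketch, not the author's routine: the 30-second interval is an arbitrary choice, and it assumes sleep() resolves at bind time (QC2LE is named here as a common choice of binding directory). The tiny mainline just calls DoDelay once so the sketch compiles and runs on its own.

```rpgle
**free
// Minimal sketch of a DoDelay procedure built on the C runtime sleep().
// Assumption: sleep() resolves at bind time; QC2LE is a common choice.
ctl-opt dftactgrp(*no) actgrp(*caller) bnddir('QC2LE');

// Prototype for the C function: unsigned int sleep(unsigned int seconds)
dcl-pr sleep uns(10) extproc('sleep');
  seconds uns(10) value;
end-pr;

// Stand-alone mainline: delay once, then end.
DoDelay();
*inlr = *on;
return;

// Suspend the job for a fixed interval before the monitor reads again.
dcl-proc DoDelay;
  dcl-c DELAY_SECONDS 30;   // hypothetical interval; tune per application
  sleep(DELAY_SECONDS);     // return value (seconds not slept) is ignored
end-proc;
```

Calling a CL program that runs DLYJOB would work just as well; sleep() simply avoids an extra program object.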

Enter EOFDLY. It really simplifies the code. With EOFDLY, your program looks like this:

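```rpgle
dou %eof(INTERFACE);     // with EOFDLY in effect, EOF means the job is ending
  read INTERFACE;        // with EOFDLY, the job waits here instead of hitting EOF
  if not %eof(INTERFACE);
    Process();           // same Process() as before
  endif;
enddo;
```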

Notice that I don't use %shtdn. There's a reason for this: with EOFDLY in effect, the system does not report end of file to your program during normal operation. Instead, when data management reaches EOF, it automatically delays the job for the number of seconds you specified on EOFDLY and then attempts another read, without returning control to your program. End of file is reported only when the job is ending, for example when it is ended with OPTION(*CNTRLD). So if you get an EOF, you know the job is shutting down: you exit the mainline loop and call your cleanup. All you have to do is issue an OVRDBF command for the file with the EOFDLY parameter:

```cl
OVRDBF FILE(INTERFACE) EOFDLY(30)
```
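Put together, a complete EOFDLY-driven monitor could be as small as the following sketch. It assumes the OVRDBF above is issued by whatever starts the monitor (for example, a CL program that then calls it), so the RPG itself never mentions EOFDLY. Process and Cleanup are hypothetical stubs standing in for your real logic, and the file is assumed to be externally described with a record format name that differs from the file name.

```rpgle
**free
// Minimal sketch of an EOFDLY-driven interface monitor.
// Assumption: the caller issues OVRDBF FILE(INTERFACE) EOFDLY(30) first;
// run without that override, the loop simply ends at the first EOF.
ctl-opt dftactgrp(*no) actgrp(*caller);

dcl-f INTERFACE usage(*input);   // interface file; layout not shown here

dou %eof(INTERFACE);
  read INTERFACE;
  if not %eof(INTERFACE);
    Process();
  endif;
enddo;

// Reached on EOF: end of test data, or a controlled end of the job.
Cleanup();
*inlr = *on;
return;

// Hypothetical stub: edit the record and maintain the legacy master file.
dcl-proc Process;
  // validation and master file update go here
end-proc;

// Hypothetical stub: write a log entry, release resources, and so on.
dcl-proc Cleanup;
  // cleanup logic goes here
end-proc;
```

Using actgrp(*caller) keeps the program in its caller's activation group, which is one simple way to make sure an override issued by the calling CL applies to the file open.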

So now, whenever there are no records in the file, the system waits 30 seconds and tries again. But how does this make debugging easier? Look at the routine again: what happens if you don't specify EOFDLY? As soon as the program sees EOF, it exits the loop. So to test, all you have to do is write one or more records to the interface file and call the RPG program without the override. The program processes each record and, when there are no more, ends gracefully. I've found this simple technique to be really helpful in testing, especially for unit test scenarios: create a test program that writes specific records to the interface file, then call that program followed by the interface monitor to make sure the records are processed correctly. It's a fantastic technique.
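A unit-test driver along those lines might look like the sketch below; this version writes the records and then calls the monitor itself. Every specific name is hypothetical: the record format INTERFACER, the character fields ITMNUM and ITMDSC, and the monitor program INTMON all stand in for your own objects. Note the CLOSE before the call: output to a sequential file may be blocked, and closing the file forces any buffered records out so the monitor can see them.

```rpgle
**free
// Hypothetical unit-test driver for the interface monitor.
// INTERFACER, ITMNUM, ITMDSC and INTMON are placeholder names.
ctl-opt dftactgrp(*no) actgrp(*caller);

dcl-f INTERFACE usage(*output);

// The monitor program, called with no EOFDLY override in effect.
dcl-pr RunMonitor extpgm('INTMON');
end-pr;

// Write known test records.
ITMNUM = 'A1001';
ITMDSC = 'Test item one';
write INTERFACER;

ITMNUM = 'A1002';
ITMDSC = 'Test item two';
write INTERFACER;

// Close to flush any blocked records before the monitor reads the file.
close INTERFACE;

// Without the override, the monitor processes both records and ends at EOF.
RunMonitor();

*inlr = *on;
return;
```

After the call returns, the test can check the legacy master file for the expected changes.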

So that's EOFDLY in a nutshell. Like any technique, it's not universally applicable, but where it does make sense it's hard to beat.

MC Press Online