With a good set of tools, you can do more testing with less effort and lower the costs and risks of application modification.

As a long-time developer, I have to confess that there is no aspect of software development I dislike more than testing. The curse is a blessing, however, in that I have worked hard to develop testing methods that achieve maximum benefit with minimal effort. I manage projects by the following maxims:

- The easier it is to test, the more testing we will do.

- Don't run 10 tests; run 10,000! But make it as easy as running 10!

Therefore, wherever possible, I invest in testing tools and processes and go for large volumes of tests. This is what I refer to as extreme testing.

Categories of Testing and Practical Priorities

Here's a way to categorize testing that is familiar to iSeries developers, along with the general sequence I recommend for addressing each category. You can't do it all at once, so start with the biggest bang for the buck:

Test Categories

| Testing Type | Batch Programs | Interactive Programs | Service Programs |
|---|---|---|---|
| Regression Testing | 1 | 4 | 2 |
| New Functionality Testing | 3 | 4 | 3 |

By "service programs," I mean such things as Web services, stored procedures, etc.—externally callable programs or middleware.

This article is too short to review all the categories, but you can learn more by reading Visual Guide to Extreme Testing on the iSeries.

The Quickest and Most Valuable Extreme Testing: Batch-Regression

The technique and goal of batch-regression testing are what I call "high-volume parallel testing," which good tools facilitate tremendously. Before I go into detail about this, a caution: you might be tempted to tackle regression testing of interactive programs first. I think this is a tactical mistake. Tackle regression testing of interactive programs last, after the other pieces are in place.

Key Components of a Testing Infrastructure

So let's focus on batch-regression testing, where we get the quickest improvements and lay the foundation for the future. All of the capabilities and techniques I discuss below are available and readily deployable in Databorough's X-Data Testing Suite.

There are three principal steps involved with regression testing batch programs:

- Prepare a suitable test database

- Execute tests

- Compare and investigate test results

Quickly Preparing the Optimal Test Database

There are two primary steps involved in test database preparation:

- Extract an optimal subset of data.

- Mask sensitive, private data.

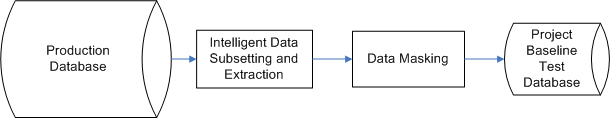

Figure 1: These steps are required for test database preparation.

The purpose of intelligent data subsetting is to create relationally coherent records and tables that are smaller than the full production database. Often, you will need many copies of the test database, so it needs to be manageable for disk space and job performance reasons.

For example, you might want a test database composed of canceled orders. You would extract orders based on a canceled flag and then populate all related tables accordingly: customers, items, code tables, etc. This is a non-trivial task and is only viable with a sophisticated tool that actually knows the complete data model.
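To make the canceled-orders example concrete, here is a minimal sketch in Python using the standard `sqlite3` module. It is not how any particular tool works: the three-table schema, the column names, and the functions `make_demo_source` and `extract_canceled_subset` are all assumptions invented for illustration. A real data model has far more tables and relationships, which is why a tool that knows the model is needed.

```python
import sqlite3

# Hypothetical three-table schema, used only for this illustration.
SCHEMA = """
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE items (id INTEGER PRIMARY KEY, description TEXT);
    CREATE TABLE orders (
        id INTEGER PRIMARY KEY,
        customer_id INTEGER REFERENCES customers(id),
        item_id INTEGER REFERENCES items(id),
        canceled INTEGER
    );
"""


def make_demo_source() -> sqlite3.Connection:
    """Build a tiny stand-in for the production database (made-up rows)."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    conn.executemany("INSERT INTO customers VALUES (?, ?)",
                     [(1, "Acme"), (2, "Bolt"), (3, "Core")])
    conn.executemany("INSERT INTO items VALUES (?, ?)",
                     [(10, "Widget"), (11, "Gadget"), (12, "Sprocket")])
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?, ?)",
                     [(100, 1, 10, 1), (101, 2, 11, 0),
                      (102, 1, 12, 1), (103, 3, 11, 0)])
    conn.commit()
    return conn


def extract_canceled_subset(source: sqlite3.Connection) -> sqlite3.Connection:
    """Copy canceled orders plus only the customers and items they reference."""
    target = sqlite3.connect(":memory:")
    target.executescript(SCHEMA)

    # Step 1: select the driving rows by the canceled flag.
    orders = source.execute(
        "SELECT id, customer_id, item_id, canceled FROM orders WHERE canceled = 1"
    ).fetchall()

    # Step 2: collect the parent keys those orders depend on.
    parents = (
        ("customers", "id, name", sorted({row[1] for row in orders})),
        ("items", "id, description", sorted({row[2] for row in orders})),
    )

    # Step 3: copy parent rows first, so every order has its related records.
    for table, columns, ids in parents:
        if not ids:
            continue
        marks = ",".join("?" * len(ids))
        rows = source.execute(
            f"SELECT {columns} FROM {table} WHERE id IN ({marks})", ids
        ).fetchall()
        target.executemany(f"INSERT INTO {table} VALUES (?, ?)", rows)

    target.executemany("INSERT INTO orders VALUES (?, ?, ?, ?)", orders)
    target.commit()
    return target
```

Calling `extract_canceled_subset(make_demo_source())` yields a database containing only the canceled orders and the customer and item rows they point to, so the subset stays relationally coherent while being smaller than the source.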

The next step is data masking, which alters confidential data to protect sensitive consumer or corporate information. Again, this is non-trivial as the data must maintain a legible appearance to users and relational integrity to associated tables. This is particularly crucial when offsite outsourcing is involved.
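One common way to meet both requirements is deterministic masking: the same real value always maps to the same masked value, so a masked key still joins correctly across tables. The sketch below illustrates that general idea with Python's standard `hmac` and `hashlib` modules. It is an assumption-laden example, not the method used by any specific product: the name lists, the secret, and the functions `mask_name` and `mask_key` are hypothetical.

```python
import hashlib
import hmac

# Hypothetical replacement values; a real tool would use much larger lists.
FIRST_NAMES = ["Alex", "Blake", "Casey", "Drew", "Ellis", "Finley"]
LAST_NAMES = ["Archer", "Brooks", "Carter", "Dalton", "Emerson", "Foster"]


def _digest(value: str, secret: bytes) -> bytes:
    """Keyed hash: without the secret, the original value is not recoverable."""
    return hmac.new(secret, value.encode("utf-8"), hashlib.sha256).digest()


def mask_name(real_name: str, secret: bytes) -> str:
    """Replace a real name with a plausible-looking fake one, deterministically."""
    digest = _digest(real_name, secret)
    first = FIRST_NAMES[digest[0] % len(FIRST_NAMES)]
    last = LAST_NAMES[digest[1] % len(LAST_NAMES)]
    return f"{first} {last}"


def mask_key(real_key: str, secret: bytes, digits: int = 9) -> str:
    """Replace an identifying key with a fixed-width numeric string.

    Because the mapping is deterministic, applying it to the key column of
    every table that stores the key keeps joins between those tables intact.
    """
    number = int.from_bytes(_digest(real_key, secret), "big") % 10**digits
    return str(number).zfill(digits)
```

With a fixed secret, `mask_key("123-45-6789", secret)` returns the same nine-digit string whether it is applied to a customer table or an order table, which is what preserves relational integrity. Note one limitation of this sketch: two different real values can occasionally map to the same masked value, so a column that must stay unique would need additional collision handling.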

Automated High-Volume Parallel Testing: The Regression Payoff

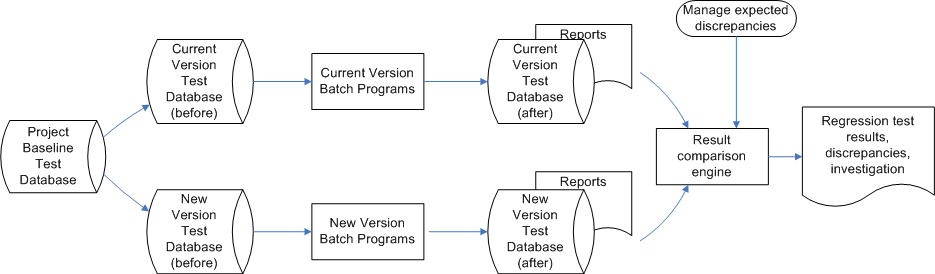

Once a subsetted database is constructed for a project, then it can be duplicated, with one copy for testing with the current software version and another copy for the new software version.

Figure 2: Your subsetted database can be duplicated.
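The duplicate-then-run idea can be sketched as follows, again in Python with `sqlite3` standing in for the real database. The function names `duplicate` and `run_parallel`, and the notion of passing each program version in as a callable, are assumptions made for this illustration. On a real system, the two "jobs" would be the current and new versions of a batch program, each pointed at its own copy of the data.

```python
import sqlite3
from typing import Callable

Job = Callable[[sqlite3.Connection], None]


def duplicate(seed: sqlite3.Connection) -> sqlite3.Connection:
    """Make an independent in-memory copy of the seed test database."""
    copy = sqlite3.connect(":memory:")
    seed.backup(copy)  # Connection.backup is part of the standard library
    return copy


def run_parallel(seed: sqlite3.Connection, current_job: Job, new_job: Job,
                 result_query: str) -> tuple[list, list]:
    """Run both program versions against identical copies of the same data.

    Returns the rows produced by result_query on each copy, ready to compare.
    The seed database itself is never modified, so it can be reused.
    """
    current_db = duplicate(seed)
    new_db = duplicate(seed)
    current_job(current_db)
    new_job(new_db)
    return (current_db.execute(result_query).fetchall(),
            new_db.execute(result_query).fetchall())
```

Because both versions start from byte-for-byte identical data, any difference between the two returned row lists can be attributed to the software change rather than to the test data.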

After parallel batch runs, file update results and report output results can be compared. Manually comparing thousands of records, fields, and printed lines for high-volume tests is not only laborious, but also error-prone due to the limits of human attention.

Automated results comparison enables a much greater volume of testing at less cost with near-perfect accuracy.
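As a rough illustration of what automated comparison involves, the sketch below matches rows from two runs by a key field and reports every missing row, extra row, and changed field. It is a simplified assumption of how such a comparison might work, not a description of any product: the function `compare_results` and the row-as-dictionary representation are hypothetical, and a real tool would also handle report output and fields that legitimately differ between runs, such as timestamps.

```python
from typing import Any

Row = dict[str, Any]


def compare_results(baseline: list[Row], candidate: list[Row],
                    key: str) -> list[str]:
    """Describe every difference between two result sets, matched by key.

    baseline  -- rows produced by the current software version
    candidate -- rows produced by the new software version
    """
    base_by_key = {row[key]: row for row in baseline}
    cand_by_key = {row[key]: row for row in candidate}
    differences: list[str] = []

    for k in sorted(base_by_key.keys() | cand_by_key.keys()):
        if k not in cand_by_key:
            differences.append(f"{key}={k}: row missing from new version output")
        elif k not in base_by_key:
            differences.append(f"{key}={k}: unexpected row in new version output")
        else:
            old, new = base_by_key[k], cand_by_key[k]
            # Compare field by field so the report pinpoints what changed.
            for field in sorted(old.keys() | new.keys()):
                if old.get(field) != new.get(field):
                    differences.append(
                        f"{key}={k}: {field} changed from "
                        f"{old.get(field)!r} to {new.get(field)!r}"
                    )
    return differences
```

An empty list means the new version reproduced the baseline exactly. Anything else is a short, specific list of records to investigate, which is what makes running thousands of tests practical.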

Achieving Extreme Testing

Regression testing is either one of the largest tasks in a project or, if not done properly, one of the largest risks in a project.

I have survived my career as a developer only by being mindful of this maxim and employing tools that facilitate testing. This is much more than a personal preference. Studies of thousands of projects have shown that the largest chunk of time in software development is devoted to detecting and correcting defects (Capers Jones, Estimating Software Costs: Bringing Realism to Estimating). A good set of tools will lay the foundation for you to do more testing with less effort and lower both the costs and risks associated with modifying your applications.


MC Press Online